The Use of Questionnaires in the Journal of Research in Music Education: 2003-2013

Jared Rawlings, Stetson University

Abstract

Challenges with the use of questionnaires as a data collection tool in social science research have been regularly reported in the field of survey methodology; however, the use of questionnaires in music education research has not been systematically investigated. The purpose of this paper was to explore the use of questionnaires as a data collection tool in the Journal of Research in Music Education (JRME) during the past 10 years (2003-2013) of publication. Studies (N = 45) from the JRME were systematically analyzed using characteristics of high-quality, rigorous survey research documented in the extant literature on survey methodology. Primary findings revealed inconsistencies in how the method and results sections of music education studies using questionnaires as a primary data collection tool are reported. At the same time, most studies published in the JRME between 2003 and 2013 conformed to the established standards of social science survey methodology. Secondary findings indicate that post-secondary and adult populations are frequently sampled in research using questionnaires. Suggestions and new directions for future research on questionnaires in music education are discussed.

Keywords: questionnaires, survey, music education


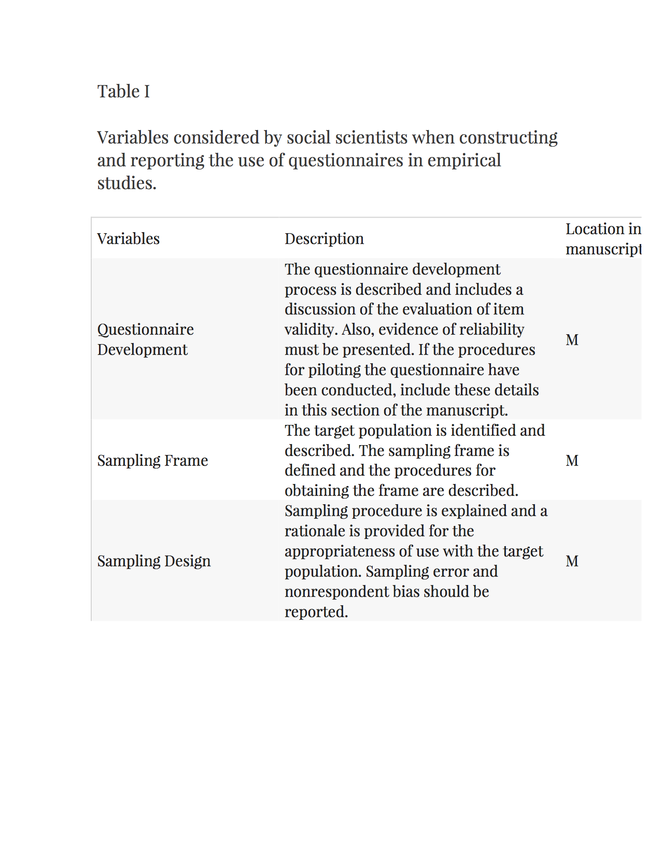

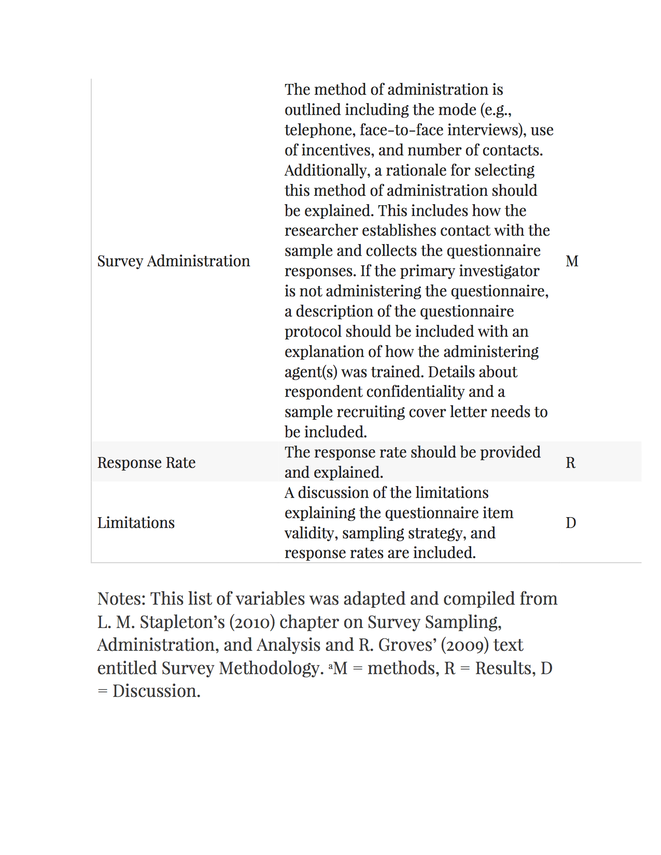

The field of survey methodology regularly reports challenges regarding the use of questionnaires as a data collection tool in social science research (de Leeuw, Hox, & Dillman, 2008; Groves, 2009; Stapleton, 2010). de Leeuw and colleagues (2008) recognize that “all surveys face a common challenge, which is how to produce precise estimates by surveying only a relatively small proportion of the larger population” (p. 2). Research in music education that uses questionnaires shares these issues with social science research; thus, the purpose of this paper was to explore how studies published in the Journal of Research in Music Education (JRME) that use questionnaires as a data collection tool conform to the expectations established by the social science research community. The paper first reviews the literature on survey methodology in the social sciences to determine the characteristics of high-quality, rigorous research, focusing specifically on questionnaires used in quantitative descriptive studies. Next, the paper describes the topics, populations, and procedures reported in the JRME during the past 10 years (2003-2013) of publication and analyzes this music education research against the criteria for questionnaire research established in the first section. This analysis includes a specific critique of how questionnaires are constructed and used in music education research. The paper concludes with a discussion of future directions and recommendations, presenting a broad argument for adopting standardized procedures to address the methodological challenges of using questionnaires as a data collection tool in survey research (see Table I).

Questionnaire Development, Sampling, and Administration in the Social Sciences

Researchers consider three critical components when developing questionnaires for high-quality, rigorous survey research (de Leeuw et al., 2008). Broadly, these components concern how questionnaires are constructed and how they are used in the research process. First, evidence of questionnaire construction or development is needed (Groves, 2009). Social science researchers agree on the common characteristics of a well-developed questionnaire. Good examples of questionnaire development demonstrate strong reliability (e.g., respondents understand the questions consistently) and construct validity (e.g., the measure relates to other theoretically driven measures) (de Leeuw et al., 2008). Reliability and validity are established standards in the social science research tradition for judging how well a question performs. To increase the clarity and understandability of the measure, a questionnaire should be evaluated before it is pilot tested. Groves (2009) described tools for evaluating questions and response items during questionnaire development. For example, researchers in the social sciences may use focus groups, cognitive interviewing[1], and protocol analysis to evaluate potential questions and response items and thereby reduce measurement error (Dillman, 2008; Fowler & Cosenza, 2008; Groves, 2009). Groves also described methods of measuring reliability, including internal consistency, test-retest, alternative form, and split-halves. The final characteristic of a well-constructed questionnaire is a procedure known as pilot testing or pre-testing, in which the questions are administered to a similar but smaller sample that may not be used in the actual study. de Vaus (1995) noted that social science researchers “sometimes avoid pilot testing by including a large number of indicators in the study and only using those which prove to be valid and reliable” (p. 54). Survey methodologists recommend pilot testing with 75 to 100 respondents to confirm the original theory or research question guiding the investigation (de Vaus, 1995).

Second, issues of population and sampling require consideration in high-quality, rigorous survey research (Dillman, 2002; Dillman & Christian, 2005; Stapleton, 2010). Specifying a population for generalization is an established practice in the social science research community, and the sampling frame is theorized, designed, and developed from this target population. Simple random sampling is a probability sampling design recognized by social science researchers as appropriate for generalizing to a population. Other probability sampling designs identified by social science researchers include systematic sampling, stratified sampling, multistage sampling, and cluster sampling. When random sampling is not used to frame the sample for a study, sampling error increases (Stapleton, 2010). As part of the broader methodological process of using questionnaires, challenges with population and sampling may affect the interpretation of the data.
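To make the difference among probability designs concrete, the sketch below draws simple random, systematic, and proportionally stratified samples from a hypothetical sampling frame of 100 teachers. The frame, the band/choir strata, and the function names are assumptions for illustration; multistage and cluster designs are omitted for brevity.

```python
import random


def simple_random_sample(frame, n, seed=None):
    """Every unit in the frame has the same chance of selection."""
    return random.Random(seed).sample(frame, n)


def systematic_sample(frame, n, seed=None):
    """Select every k-th unit after a random start, with interval k = len(frame) // n."""
    k = len(frame) // n
    start = random.Random(seed).randrange(k)
    return frame[start::k][:n]


def stratified_sample(frame, stratum_of, n, seed=None):
    """Proportional allocation: each stratum's share of n matches its share of the frame.

    Rounding can make the total differ slightly from n when shares are not whole numbers.
    """
    rng = random.Random(seed)
    strata = {}
    for unit in frame:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for units in strata.values():
        share = round(n * len(units) / len(frame))
        sample.extend(rng.sample(units, min(share, len(units))))
    return sample


# Hypothetical sampling frame: 100 teachers, 60 band directors and 40 choir directors.
frame = [(f"T{i:03d}", "band" if i < 60 else "choir") for i in range(100)]
srs = simple_random_sample(frame, 10, seed=1)
sys_sample = systematic_sample(frame, 10, seed=1)
strat = stratified_sample(frame, lambda unit: unit[1], 10, seed=1)
print(sum(1 for unit in strat if unit[1] == "band"))  # 6
```

Stratifying guarantees that each subgroup appears in proportion to its size, which a simple random sample of 10 only achieves on average.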

Third, researchers should consider the procedures used to administer the questionnaire. Methods of questionnaire administration have been shown to influence response and nonresponse rates and measurement error (de Vaus, 1995; de Leeuw et al., 2008). Single modes of questionnaire administration include contact modes (e.g., group self-administered questionnaires, researcher-administered questionnaires) and non-contact modes (e.g., mailed questionnaires, computer-assisted questionnaires). Popular methods of collecting responses include paper-pencil booklets and internet-based survey designs. International survey methodologists have also encouraged multimodal techniques of administering questionnaires (e.g., combinations of contact and non-contact modes); however, these techniques are often cost prohibitive (de Leeuw et al., 2008). The number of contacts or attempts the researcher makes to collect responses also influences response and nonresponse rates and measurement error. For example, in educational research, students may be absent on the day a school-delivered questionnaire is given. After one round of data collection, the administering agent may choose to return to the population to contact individuals who did not complete the questionnaire the first time. Finally, confidentiality may be a concern for respondents. Stapleton (2010) contended that anonymous questionnaires “… [yield] less bias under some contexts” (p. 402). However, promising confidentiality and anonymity to participants does not eliminate bias from an investigation. Stapleton (2010) also argued that “… response rates less than 50% should not be approved” by reviewers of journals in the social sciences (p. 403). Beyond attaining an acceptable response rate, social science researchers insist that reasons for nonresponse be acknowledged. For example, researchers should determine whether nonresponse to questionnaire items is random or predictable from the question (de Leeuw et al., 2008). Researchers must also report issues that may have influenced nonresponse. Groves (2009) suggested the following categories of influences on nonresponse: poor development of the survey protocol, inconsistent interviewer training, the social climate of the environment, and personal characteristics of the respondent. Other influences that may affect nonresponse are poorly written cover letters and low participant motivation; incentives are occasionally used to motivate participant response. Overall, careful planning of questionnaire administration is likely to improve survey response rates.
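As a hedged arithmetic sketch, the code below computes a simple unit response rate, checks it against the 50% threshold Stapleton (2010) proposed, and tabulates item nonresponse so a researcher can see whether skipped items cluster on particular questions. The counts, item names, and function names are hypothetical, and the rate is defined simply as usable returns divided by eligible sampled units; formal response-rate definitions vary in how they treat partial returns and ineligible cases.

```python
def response_rate(completed, eligible):
    """Percentage of eligible sampled units that returned a usable questionnaire."""
    return 100 * completed / eligible


def meets_stapleton_threshold(rate_percent, threshold=50.0):
    """Stapleton (2010) argued that response rates below 50% should not be approved."""
    return rate_percent >= threshold


def item_nonresponse(responses):
    """Percentage of respondents who skipped each item (None marks a skipped item)."""
    items = responses[0].keys()
    return {
        item: 100 * sum(r[item] is None for r in responses) / len(responses)
        for item in items
    }


# Hypothetical administration: 400 teachers invited, 180 usable returns.
rate = response_rate(180, 400)
print(rate, meets_stapleton_threshold(rate))  # 45.0 False
returns = [{"q1": 4, "q2": None}, {"q1": 3, "q2": 5}, {"q1": 5, "q2": 2}]
print(item_nonresponse(returns))
```

A high skip rate concentrated on one item suggests nonresponse that is predictable from the question rather than random, which is the distinction de Leeuw et al. (2008) ask researchers to check.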

Questionnaires in Music Education Research

Using questionnaires in the social sciences is a popular method for collecting data from a population sample; however, issues of questionnaire development, sampling, and administration have long concerned researchers (Stapleton, 2010). One such issue is how the terms survey and questionnaire are defined. The word survey has many definitions and uses. As a noun, a survey is an activity in which people are asked a question or series of questions to gather information about their behaviors or perceptions (Merriam-Webster, May 3, 2014). Similarly, as a verb, to survey is to ask a question or series of questions to gather information. The difference between these two definitions centers on the word’s intended use. Technically, questionnaires are tools used to collect data in surveys (Stapleton, 2010). This critical inquiry is dedicated to examining the use of questionnaires in music education research.

Researchers in music education have conducted content analyses to explore trends in the field (Schmidt & Zdzinski, 1993; Yarbrough, 1984, 2002). Yarbrough (2002) analyzed 50 years of publications appearing in the JRME and presented descriptive data, including frequencies of research methodologies and acceptance rates. In another analysis, Miksza and Johnson (2012) examined the theoretical frameworks applied in music education research published in the JRME from 1979 to 2009. This analysis used a systematic review process that included intercoder agreement to increase the reliability of the results. The researchers found a large number of distinct theoretical frameworks (144), with LeBlanc’s Interactive Theory of Music Preference the most commonly cited (30 studies). Content analyses are useful to all researchers, but few researchers have applied critical inquiry to music education research. One method of critical inquiry is a retrospective survey that investigates a question across a body of research to guide future research and reporting practices. Overland (2014) critically examined the statistical power and Type II error rates of studies published in the JRME from 2000 to 2010. Only preliminary results are available for this investigation; they indicate that the sampled studies often lack sufficient statistical power to detect a meaningful effect. Systematic reviews of published research provide valuable information about the status of research and may identify areas for future research in the social sciences (Hobson & Sharp, 2005).
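Miksza and Johnson (2012) used intercoder agreement to strengthen reliability, but the text does not say which index they computed. One widely used option for two coders is Cohen's kappa, sketched below alongside simple percent agreement for hypothetical present/absent codes. The data and function names are illustrative assumptions, not a reconstruction of their procedure.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Proportion of units on which the two coders assigned the same code."""
    return sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Agreement between two coders corrected for agreement expected by chance."""
    n = len(coder_a)
    observed = percent_agreement(coder_a, coder_b)
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    categories = set(coder_a) | set(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / n**2
    return (observed - expected) / (1 - expected)  # undefined if expected == 1


# Hypothetical codes (1 = framework present, 0 = absent) for ten articles.
coder_a = [1, 1, 0, 0, 1, 0, 1, 1, 0, 1]
coder_b = [1, 0, 0, 0, 1, 0, 1, 1, 1, 1]
print(percent_agreement(coder_a, coder_b), round(cohens_kappa(coder_a, coder_b), 3))  # 0.8 0.583
```

Kappa is lower than raw agreement here because both coders mark "present" often, so much of their agreement would be expected by chance alone.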

The JRME has been recognized as a major publication in music education, and several researchers have conducted field surveys of selected topics within it (Miksza & Johnson, 2012; Overland, 2014; Schmidt & Zdzinski, 1993; Yarbrough, 1984, 2002). Miksza and Johnson (2012) offered a well-developed rationale for selecting the JRME as an object of study because of the publication’s impact on the global community of music education researchers. Moreover, according to Sims (2014), the JRME is a selective publication with a 17-18% acceptance rate and a worldwide readership. Because of its scope and purpose, the JRME is an appropriate source for exploring the use of questionnaires in music education research studies published between 2003 and 2013.

The use of questionnaires as a data collection tool in survey research can be methodologically complicated for researchers in the social sciences, and misunderstandings about their use may persist through publication. Issues with questionnaire construction and methodological procedures have prompted survey methodologists to publish standardized procedures for using questionnaires (de Leeuw et al., 2008; de Vaus, 1995; Dillman, 2000, 2002; Groves, 2009; Stapleton, 2010). Descriptive analyses are needed in music education to understand how questionnaires are constructed and what methodological procedures accompany this data collection tool in empirical investigations. Therefore, the purpose of this study was to explore how studies published in the JRME that use questionnaires as a data collection tool conform to the expectations established by the social science research community.

Method

Sampling Procedure

This section describes the elements of the critical and systematic research review process, including the method for selecting and categorizing the papers included in this review. Hemsley-Brown and Sharp (2003) describe a systematic review as (a) addressing specific research questions; (b) documenting the methodology of the literature search process; (c) establishing criteria for literature inclusion and exclusion based on the scope of the review; and (d) providing a clear presentation of the findings. To complete this process, Fink (2005) suggested seven stages for systematic reviews:

- Selecting research questions

- Selecting the bibliographic or article databases, websites and other sources

- Choosing search terms

- Applying practical screening criteria

- Applying methodological screening criteria

- Conducting the review

- Synthesizing the review (pp. 3-5).

A keyword search was conducted in the SAGE full-text database for the JRME using two search terms: survey and questionnaire. After 147 titles and abstracts were screened, 74 papers were identified for review based on the following initial inclusion criteria:

- Published between 2003-2013 in the JRME.

- A questionnaire was the primary method of data collection.

Because the goal of this review was to identify research that used questionnaires as the primary data collection tool in the JRME, papers were excluded immediately if they used questionnaires only to gather demographic data or to collect participants’ open responses (n = 12). This decision was made because the reliability and validity of open responses are inherently difficult to establish and report (Fowler & Cosenza, 2008). To illustrate, researchers define the constructs they want to measure, and the degree of association between a construct and respondents’ answers indicates how well a question has been designed. Open-response items in questionnaires can provide useful information to researchers; however, for the purposes of this study, information about questionnaire development, including reliability and validity, was necessary.

I chose to focus on the characteristics of high-quality, rigorous survey research because survey methodologists in the social sciences have identified a clear need to critically examine studies using questionnaires. Therefore, papers were excluded if they did not fully report questionnaire development, administration, and sample design. Seventeen papers were excluded because entire sections of the method (e.g., questionnaire development, administration components) were not reported.

Data Analysis

Forty-five papers met the review criteria and were analyzed to determine the population, questionnaire development, sample, sample design, administration, and response rates (see Appendix A for a bibliographic index of the 45 studies). I focused on these characteristics because they were important for critiquing the rigor of the studies. Dichotomous variables were created from these characteristics and assembled into a researcher codebook to facilitate data extraction. Following data extraction using the codebook, a data file was created in the Statistical Package for the Social Sciences (SPSS 22.0 for Mac). Finally, I examined the frequencies of the variables to identify outliers in the data.
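The study carried out this step in SPSS. Purely as an illustration of the logic, the Python sketch below tallies dichotomous codebook variables and screens the frequencies for impossible values (any code other than 0 or 1, including a missing code). The variable names and study records are hypothetical; the actual codebook items are not listed in the text.

```python
from collections import Counter

# Hypothetical codebook variables, named for illustration only.
VARIABLES = ("pilot_test", "probability_sample", "researcher_present", "response_rate_reported")


def tally(studies, variables=VARIABLES):
    """Count studies coded 1 on each dichotomous variable and flag any code other than 0 or 1."""
    counts = {v: 0 for v in variables}
    invalid = []
    for study in studies:
        for v in variables:
            code = study.get(v)  # None if the variable was never coded
            if code == 1:
                counts[v] += 1
            elif code != 0:
                invalid.append((study["id"], v, code))
    return counts, invalid


studies = [
    {"id": "S01", "population": "in-service", "pilot_test": 1, "probability_sample": 0,
     "researcher_present": 0, "response_rate_reported": 1},
    {"id": "S02", "population": "pre-service", "pilot_test": 0, "probability_sample": 1,
     "researcher_present": 1, "response_rate_reported": 1},
    {"id": "S03", "population": "in-service", "pilot_test": 2, "probability_sample": 0,
     "researcher_present": 0},  # one miscoded value and one missing variable
]
counts, invalid = tally(studies)
by_population = Counter(s["population"] for s in studies)  # frequencies by population of interest
print(counts)
print(invalid)
```

For dichotomous codes, an "outlier" can only be a miscoded or missing entry, so the frequency check doubles as a data-entry audit before analysis.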

Results

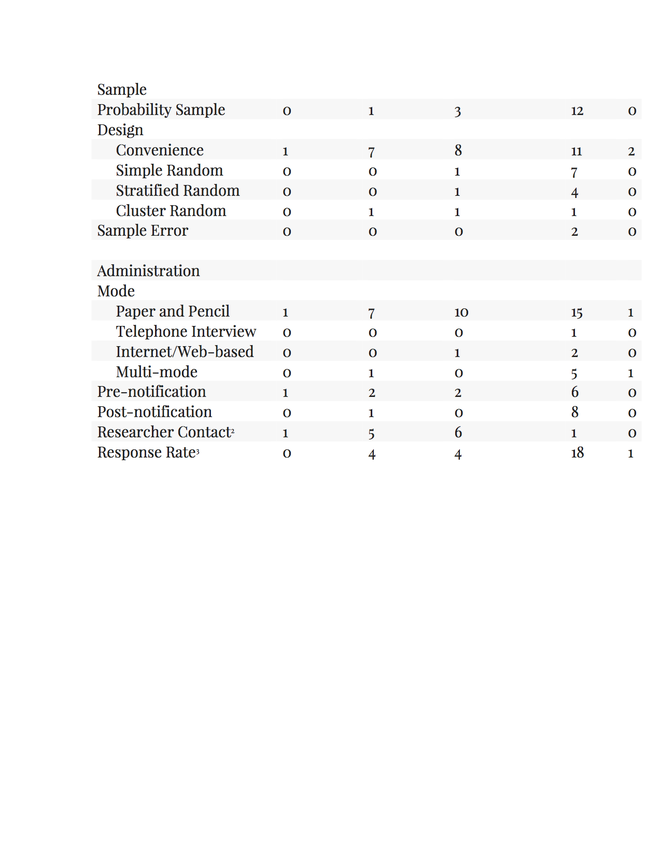

The studies (N = 45) included in this critical review met the characteristics of high-quality, rigorous research established by social science survey methodologists. Studies using questionnaires as a primary data collection tool appeared in the JRME regularly from 2003 to 2013, with the greatest number of studies (17.8%, or 8 studies) published in 2012 and the fewest (4.4%, or 2 studies) published in 2005. On average, one study using a questionnaire appeared per issue of the JRME during this period. Populations of interest included pre-school aged children (1 study), school-aged youth (8 studies), pre-service music majors (11 studies), adults/in-service music teachers (23 studies), and multi-age populations (2 studies). Studies focused on all music subjects (21 studies), instrumental music (17 studies), or vocal and general music (5 studies), and two studies investigated administrators. With 40% of studies in this review examining in-service teachers, this population is well researched in the field of music education. Table II presents the frequency data for the studies selected for review, with variables reported and sorted by population of interest.
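The percentages in this paragraph follow directly from the reported counts. The short check below uses only the population counts given above: it confirms that they sum to 45 and converts them to shares of the total. By the same arithmetic, 8 of 45 studies is 17.8% and 2 of 45 is 4.4%, the shares reported for 2012 and 2005, and the combined adults/in-service category accounts for 23 of 45 studies, about 51.1%.

```python
# Counts by population of interest, as reported in the text (N = 45).
POPULATIONS = {
    "pre-school aged children": 1,
    "school-aged youth": 8,
    "pre-service music majors": 11,
    "adults/in-service music teachers": 23,
    "multi-age populations": 2,
}


def percentages(counts):
    """Share of the total for each category, rounded to one decimal place."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


print(sum(POPULATIONS.values()))  # 45
for name, pct in percentages(POPULATIONS).items():
    print(f"{name}: {pct}%")
```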

[Notes to Table II: (1) Only the number of studies reporting evidence of each variable is listed in Table II. For each study, a “1” indicates that the variable was present; a “0” indicates that the researchers did not report the variable or that there was no evidence of it in the study. (2) The researcher was present with the participants at the time of data collection. (3) Response rate percentages were explicitly reported, or enough information was available to calculate the response rate percentage.]

Discussion

These studies are highly diverse in their questionnaire development and administration as well as their sample design. Although this range makes it difficult to draw specific conclusions about the methods and components most likely to produce results generalizable to a larger population, the results in Table II are consistent with developing views in social science research on the use of questionnaires as a primary data collection tool (Groves, 2009). Primary findings on questionnaire development, sample design, and questionnaire administration are discussed in turn in the rest of this section. Each topic is first discussed broadly in relation to the findings of this inquiry; then exemplars from the sample of publications are presented to illustrate the characteristics of high-quality research.

Questionnaire Development in Music Education

The use of questionnaires has been a popular method of collecting data in music education and may appear straightforward, but it can involve a multifaceted set of procedures. First, music education researchers need to define what they mean by a survey or a questionnaire in order to use this method of data collection properly. Survey methodology is itself a broad field of study whose specialists are known as survey methodologists. Throughout the examination of the JRME from 2003-2013, it was clear that researchers in music education sometimes misuse the term survey. In most of these publications, the intended meaning of the term was difficult to determine unless the authors stated it explicitly, so future research in music education needs to make clear how the word survey is intended. The keyword search in this investigation revealed that many researchers used the word survey in their studies; however, the term may be used as either a noun or a verb, and Groves (2009) suggested that survey, treated as a verb, is an overarching term that can be further specified. For example, two methods of surveying have been identified in social science research: questionnaires and interviews (e.g., face-to-face, telephone). Both methods appear in music education research, but the purpose of this investigation was to examine the use of questionnaires as a primary data collection tool, which explains why dozens of studies were excluded at the initial screening. Future research in music education may need to clarify how data are collected in studies using questionnaires.

The majority of the studies in this investigation (n = 24) involved the creation of original tools for collecting or measuring participant behaviors and/or perceptions. Standards for creating questionnaires require that evidence of questionnaire reliability and validity be reported in the manuscript (Stapleton, 2010). Several procedures for demonstrating reliability and validity exist; however, procedures not reported in a manuscript were not counted in Table II. Several researchers in music education provided ample evidence of thorough questionnaire development. For example, Valerio, Reynolds, Morgan, and McNair (2012) examined the construct validity of the Children’s Music-Related Behavior Questionnaire (CMRBQ), “… an instrument designed for parents to document music-related behaviors about their children and themselves” (p. 186). The researchers reported previous research on the development of this questionnaire before describing the construct validity testing, which involved model testing through confirmatory factor analysis (CFA) with maximum likelihood estimation (MLE) to assess model-data fit. The authors also provided details to guide the interpretation of the results. Another example of thorough questionnaire development in this sample was Jutras’ (2006) investigation of the benefits of adult piano study as self-reported by selected adult piano students (N = 711). The article reports evidence of reliability and validity, along with cognitive interviewing procedures used to increase the construct validity of the questionnaire. Additionally, Jutras piloted the questionnaire with an adequately sized convenience sample before collecting data from a national sample. Both studies highlight the rigor necessary when using a questionnaire as a primary data collection tool in social science research.

Creating original questionnaires through rigorous development procedures can be time-consuming. The studies discussed next used or modified existing questionnaires. Russell and Austin (2010) investigated the assessment practices of secondary music teachers as a follow-up to Austin (2003). Details of reliability and validity were reported for the Secondary School Music Assessment Questionnaire (SSMAQ) used in this study. Additionally, Russell and Austin reported the piloting procedure and questionnaire development for both the original study and the follow-up study, and this information was used to support using the questionnaire in the follow-up. This study is an example of how a pre-existing questionnaire can be piloted to demonstrate validity with the intended population. Whether researchers use a pre-existing questionnaire, modify an existing questionnaire, or develop a new one, they must report evidence of the questionnaire’s reliability and validity in the article. Music education researchers replicating a study that used a pre-existing questionnaire are encouraged to pilot the data collection tool to confirm its reliability and validity with the population of interest. Further research in music education may need to devote entire investigations to examining the reliability and validity of questionnaires (see Valerio et al., 2012), mirroring this established practice in the social sciences.

Designing a Sample for Using Questionnaires in Music Education

Data collected from a sample through questionnaires are meant to generalize to a larger population (Stapleton, 2010). This investigation of music education studies using questionnaires as a primary data collection tool in the JRME found that a majority of studies (n = 29) used a non-probability sample. Because the music education researchers in this review have predominantly administered questionnaires to non-probability samples, their results must be interpreted cautiously. However, several investigators reported using a probability sample and specified their sample design. One such study (Hopkins, 2013) investigated teachers’ beliefs and practices regarding teaching tuning in elementary and middle school group string classes. Hopkins provided ample evidence of rigor in developing a high-quality, researcher-designed questionnaire. The researcher identified a target population and described the strategies used for simple random sampling, and thorough details about sample selection, with relevant citations, grounded this procedure.

Another sampling procedure revealed in the current study was the use of a pre-existing data set. Elpus and Abril (2011) examined high school music ensemble students in the United States using data collected in the Education Longitudinal Study of 2002 (ELS). The researchers reported the ELS sampling and questionnaire administration procedures that required them to account for a complex cluster sampling design. Furthermore, Elpus and Abril documented how the ELS data were sampled in their study and what steps were necessary to address the inherent violation of the assumption of independent observations in the ELS data set. When sampling a population or pre-existing data set nested in schools, a clustered sampling procedure is recommended (Elpus & Abril, 2011). Researchers in music education are also encouraged to use probability sampling in future studies so that findings may be generalized to a larger population.

Survey researchers in the social sciences recognize sampling error as a necessary component to report in any study (Stapleton, 2010). According to Classical Measurement Theory (CMT), an observed score (X) is the sum of a true score (T) and error (E), or X = T + E[2]; error will therefore always exist when measurement is used. Likewise, sampling error is always present when a questionnaire is administered to a sample rather than to the entire population, so it is necessary to report it. Sampling error can be conceptualized as the discrepancy between a sample estimate and the corresponding value in the population of interest. Although sampling error is important in the social sciences, most studies in this investigation showed little evidence of reporting it. Only four studies included this information (Hopkins, 2013; Russell, 2012; Russell & Austin, 2010; Valerio et al., 2012). Hopkins (2013) reported calculating the necessary sample size in order to “… provide adequate coverage and to minimize sampling error” (p. 100). Nonrespondent bias was also minimized because the researcher tracked geographic information about respondents and nonrespondents and compared the current sample with prior research. The percentage of error (margin of error) and the confidence interval are necessary components of reporting sampling error because they tell readers how to interpret the study results.
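The text does not state which formula Hopkins (2013) used to determine sample size. One common approach, sketched below as an assumption, is Cochran's formula for estimating a proportion with a finite population correction, paired with the corresponding margin of error at a chosen confidence level. The inputs (a 5% margin, 95% confidence, a frame of 1,000 teachers) and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def z_value(confidence=0.95):
    """Two-sided critical value from the standard normal distribution."""
    return NormalDist().inv_cdf(1 - (1 - confidence) / 2)


def margin_of_error(p, n, population=None, confidence=0.95):
    """Margin of error for a sample proportion p, with optional finite population correction."""
    standard_error = math.sqrt(p * (1 - p) / n)
    if population is not None:
        standard_error *= math.sqrt((population - n) / (population - 1))
    return z_value(confidence) * standard_error


def required_sample_size(margin, population=None, p=0.5, confidence=0.95):
    """Cochran's sample size for estimating a proportion; p = 0.5 is the most conservative choice."""
    n0 = z_value(confidence) ** 2 * p * (1 - p) / margin**2
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)  # finite population correction
    return math.ceil(n0)


print(required_sample_size(0.05))                   # 385
print(required_sample_size(0.05, population=1000))  # 278
print(round(margin_of_error(0.5, 385), 4))
```

These quantities describe sampling error only and assume a probability sample; they say nothing about nonresponse bias, which has to be examined separately, as Hopkins did by comparing respondents and nonrespondents.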

Questionnaire Administration in Music Education

Findings from this investigation demonstrate a wide range of techniques and strategies for administering questionnaires in music education research. Overwhelmingly, the modus operandi for researchers in music education has been to collect data in a paper-pencil format (34 studies). Miksza, Roeder, and Biggs (2010) administered a questionnaire to understand Colorado band directors’ opinions of the skills and characteristics important to successful music teaching. The researchers distributed their questionnaire in both paper-pencil and electronic formats to see which mode elicited a greater response rate, and the article details the procedures for this multimodal administration. Miksza and colleagues found that Colorado band directors responded more to the electronic questionnaire (M = 36%) than to the paper-pencil version (M = 17%). Research in the social sciences is not conclusive about which mode of questionnaire administration to recommend; rather, the choice of mode depends on the research questions (Dillman, Eltinge, Groves, & Little, 2002).

Other variables, including participant pre-notification and post-notification procedures, affect questionnaire administration and ultimately the response rate. Pre-notification informs participants before they are invited to take the questionnaire; post-notifications are sent to participants who have been invited but have not yet responded. These procedures typically yield a higher overall response rate. Miksza and colleagues (2010) found that paper-pencil questionnaires with post-notification yielded over 75% more responses than paper-pencil questionnaires without post-notification. However, the same result was not found for electronic questionnaire administration.

Response and nonresponse rates are critical components to include in any article submitted for publication (Stapleton, 2010). This investigation of studies selected from the JRME showed that even high-quality, rigorous studies sometimes omit critical information about questionnaire administration. Ideally, this information is presented clearly so that readers do not have to locate the necessary information and calculate the percentage themselves, as was the case for three studies in this investigation. Although nonresponse rates are recognized as an important component of social science survey research (Dillman et al., 2002), researchers in music education rarely report them; nonresponse was not included as a variable in Table II because only two studies reported it. Knowing who does not respond to a questionnaire is valuable for researchers. This established practice in social science survey research may be worth adopting in future studies in music education.

New Directions and Recommendations for Future Research

The current study systematically identified and examined 45 studies from the JRME’s last decade of published research as a means of exploring the use of questionnaires in music education. The research review and data extraction process revealed inconsistencies in how the studies reported the relevant information. Overall, 29 of the 74 studies identified were excluded from review, either because they did not report the information needed to complete data extraction (n = 17) or because they used questionnaires other than as a primary data collection tool (n = 12). Questionnaires can be used to collect data in non-survey research; for example, in an experimental study, participants might use a questionnaire to respond to a musical stimulus. More research on studies in other peer-reviewed journals is needed to inform future reporting on the use of questionnaires as the primary data collection tool in both survey and non-survey research.

Results from this study suggest that deficiencies in specifying questionnaire development, sample selection and design, and administration fidelity may have limited the quality and rigor of survey research within the field of music education. Although submission guidelines (e.g., word or page limits) may constrain what authors can report, editorial review committees need to establish procedures for reporting sample design, questionnaire development, and administration method. Future research in music education would benefit from adopting standardized procedures for reporting survey design, sampling, and administration in a manuscript (See Table I). In fact, Groves (2009) recommends that questionnaire reliability and validity, sampling procedure and design, and administration be reported as part of the study method. Furthermore, Stapleton (2010) offered standardized procedures from the social sciences that are available for adoption by researchers in music education (See Table I). Exemplar studies that use questionnaires as a primary data collection tool have been identified throughout the current examination and may serve as models of rigorous questionnaire research within the field of music education.

Using a standardized procedure for reviewing manuscripts that use a questionnaire aligns research in music education with the broader social science community. Occasionally, researchers in music education adopt a measure from outside the field and use it with a music population. The practice of adopting questionnaires from other disciplines into music education research is encouraging. However, using a poorly designed questionnaire may have interdisciplinary ramifications. For instance, even when a measure is known to be reliable and valid, appropriate piloting procedures should be conducted to verify the tool with a sample of the target population. Without piloting, the results of the investigation may not advance knowledge in the field. This is a primary concern, because the mission of researchers and scientists is to create new knowledge that builds on an extant knowledge base.

An additional ramification of poorly designed questionnaire research, or of inadequate reporting of well-designed questionnaire research, is that populations may be overlooked. Populations here include groups of people clustered by geography, shared interests, chronological age, and many other characteristics. When reporting sampling and administration, researchers need to provide enough detail that another researcher could reasonably replicate the study or clearly understand the target population. This information helps build a profile of the research topic being investigated. Understanding which populations have been investigated, and how the research was conducted, can guide future researchers and illuminate populations that have been silenced in the research.

The United States is not a homogeneous society, and sample descriptions should be detailed. Elpus and Abril (2011) collected demographic information that made clear how distinctive a sample can be. In building a demographic profile of high school music ensemble students in the United States, these researchers were able to make descriptive generalizations about the demographics of music classrooms. As researchers describe their samples more accurately over time, stakeholders in music education research will be able to connect the historical findings of studies using questionnaires to their current populations. Longitudinal trends in these data may then guide researchers in how best to serve all musical learners. Future studies using questionnaires as a primary data collection tool must thoroughly document the demographics of the population being studied and should be directed toward populations that are underrepresented in the literature, such as school-age populations. Otherwise, school-age populations will remain underrepresented, and the next generation of researchers in music education may lack the demographic information needed to extend past research.

Limitations of the Current Study

Despite the systematic procedure for selecting the studies in this review, the results reported in Table II may not be free from error. Because no additional researchers verified the results of this study, each study required thorough screening during the data extraction process. Studies that were not included in this review or not designated as exemplars should not be dismissed or considered poorly designed; they simply did not meet the criteria for inclusion in this study.

When a systematic review is conducted, criteria are selected to serve the purpose of the study. The systematic review process is subject to research bias (Petticrew, 2001). Research bias arises during the design, measurement, sampling, procedure, or choice of problem being studied and goes unacknowledged by the researcher (Cooper, 1998). In this study, a deliberately limited list of criteria was used to understand how studies published in the JRME that use questionnaires as a data collection tool conform to the expectations established by the social science research community.

This review procedure is relatively new to social science research (Petticrew, 2001), although systematic reviews are common in the fields of health, medicine, and law. Describing how the study inclusion criteria were determined outlines the review process in the method section and makes replication possible. This does not suggest that the study is without bias.

References

Abril, C. R., & Gault, B. M. (2006). The state of music in the elementary school: The principal’s perspective. Journal of Research in Music Education, 54, 6–20. doi:10.1177/002242940605400102

Austin, J. R. (2003). Hodgepodge grading in high school music classes: Is there accountability for artistic achievement? Paper presented at the annual meeting of the American Educational Research Association, Chicago, IL.

Beatty, P. C., & Willis, G. B. (2007). Research synthesis: The practice of cognitive interviewing. Public Opinion Quarterly, 71(2), 287–311. doi:10.1093/poq/nfm006

de Leeuw, E. D., Hox, J. J., & Dillman, D. A. (2008). International handbook of survey methodology. New York, NY: Taylor & Francis.

De Vaus, D. A. (1995). Surveys in social research. St. Leonards, NSW, Australia: Allen & Unwin.

Dillman, D. A. (2000). Mail and internet surveys: The tailored design method (2nd ed.). New York, NY: Wiley.

Dillman, D. A. (2002). Presidential address: Navigating the rapids of change: Some observations on survey methodology in the early twenty-first century. Public Opinion Quarterly, 66, 473-494.

Dillman, D. A., Eltinge, J. L., Groves, R. M., & Little, R. J. A. (2002). Survey nonresponse in design, data collection, and analysis. In R. M. Groves, D. A. Dillman, J. L. Eltinge, & R. J. A. Little (Eds.), Survey nonresponse (pp. 1-26). New York, NY: Wiley.

Dillman, D. A., & Christian, L. M. (2005). Survey mode as a source of instability in responses across surveys. Field Methods, 17(1), 30-52.

Dillman, D. A. (2008). The logic and psychology of constructing questionnaires. In E. D. de Leeuw, J. J. Hox, & D. A. Dillman (Eds.), International handbook of survey methodology (pp. 161-175). New York, NY: Psychology Press.

Elpus, K., & Abril, C. R. (2011). High school music ensemble students in the United States: A demographic profile. Journal of Research in Music Education, 59, 128–145. doi:10.1177/0022429411405207

Fink, A. (2005). Conducting research literature reviews: From the internet to paper (2nd ed.). Thousand Oaks, CA: Sage.

Fowler, F. J., & Cosenza, C. (2008). Writing effective questions. In E. D. de Leeuw, J. J. Hox, & D. A. Dillman (Eds.), International handbook of survey methodology (pp. 136-160). New York, NY: Psychology Press.

Groves, R. M. (2009). Survey methodology. Hoboken, NJ: Wiley.

Hemsley-Brown, J., & Sharp, C. (2003). The use of research to improve professional practice: A systematic review of the literature. Oxford Review of Education, 29(4), 449-470.

Hobson, A. J., & Sharp, C. (2005). Head to head: A systematic review of the research evidence on mentoring new head teachers. School Leadership and Management, 25(1), 25-42.

Hopkins, M. T. (2013). Teachers’ practices and beliefs regarding teaching tuning in elementary and middle school group string classes. Journal of Research in Music Education, 61, 97–114. doi:10.1177/0022429412473607

Jutras, P. J. (2006). The benefits of adult piano study as self-reported by selected adult piano students. Journal of Research in Music Education, 54, 97–110. doi:10.1177/002242940605400202

Miksza, P., & Johnson, E. (2012). Theoretical frameworks applied in music education research. Bulletin of the Council for Research in Music Education, 193, 7-30.

Miksza, P., Roeder, M., & Biggs, D. (2009). Surveying Colorado band directors’ opinions of skills and characteristics important to successful music teaching. Journal of Research in Music Education, 57, 364–381. doi:10.1177/0022429409351655

Overland, C. (2014). Statistical power in the Journal of Research in Music Education: 2000-2010. Paper presented at the annual meeting of the American Educational Research Association, Philadelphia, PA.

Petticrew, M. (2001). Systematic reviews from astronomy to zoology: Myths and misconceptions. British Medical Journal, 322(7278), 98-101.

Russell, J. A., & Austin, J. R. (2010). Assessment practices of secondary music teachers. Journal of Research in Music Education, 58, 37–54. doi:10.1177/0022429409360062

Schmidt, C. P., & Zdinski, S. F. (1993). Cited quantitative research in articles in music education research journals, 1975-1990: A content analysis of selected studies. Journal of Research in Music Education, 41, 5-18.

Sims, W. L. (2014, April). Publishing your music education research. Paper presented at the meeting of the National Association for Music Education, St. Louis, MO.

Stapleton, L. M. (2010). Survey sampling, administration, and analysis. In G. R. Hancock, & R. O. Mueller (Eds.), The reviewer’s guide to quantitative methods in the social sciences (pp. 397-411). New York, NY: Routledge.

Valerio, W. H., Reynolds, A. M., Morgan, G. B., & McNair, A. A. (2012). Construct validity of the Children’s Music-Related Behavior Questionnaire. Journal of Research in Music Education, 60, 186–200. doi:10.1177/0022429412444450

Yarbrough, C. (1984). A content analysis of the Journal of Research in Music Education, 1953-1983. Journal of Research in Music Education, 32, 213-222.

Yarbrough, C. (2002). The first 50 years of the Journal of Research in Music Education: A content analysis. Journal of Research in Music Education, 50, 276-279.

Appendix A

Bibliographic Index of Studies Reviewed

Abeles, H. (2004). The effect of three orchestra/school partnerships on students’ interest in instrumental music instruction. Journal of Research in Music Education, 52, 248-263. doi:10.2307/3345858

Abeles, H. (2009). Are musical instrument gender associations changing? Journal of Research in Music Education, 57, 127–139. doi:10.1177/0022429409335878

Abril, C. R., & Gault, B. M. (2006). The state of music in the elementary school: The principal’s perspective. Journal of Research in Music Education, 54, 6–20. doi:10.1177/002242940605400102

Abril, C. R., & Gault, B. M. (2008). The state of music in secondary schools: The principal’s perspective. Journal of Research in Music Education, 56, 68–81. doi:10.1177/0022429408317516

Bauer, W. I., Reese, S., & McAllister, P. A. (2003). Transforming music teaching via technology: The role of professional development. Journal of Research in Music Education, 51, 289. doi:10.2307/3345656

Boucher, H., & Ryan, C. A. (2011). Performance stress and the very young musician. Journal of Research in Music Education, 58, 329–345. doi:10.1177/0022429410386965

Brophy, T. S. (2005). A longitudinal study of selected characteristics of children’s melodic improvisations. Journal of Research in Music Education, 53, 120–133. doi:10.1177/002242940505300203

Byo, J. L., & Cassidy, J. W. (2005). The role of the string project in teacher training and community music education. Journal of Research in Music Education, 53, 332–347. doi:10.1177/002242940505300405

Campbell, M. R., & Thompson, L. K. (2007). Perceived concerns of preservice music education teachers: A cross-sectional study. Journal of Research in Music Education, 55, 162–176. doi:10.1177/002242940705500206

Custodero, L. A., & Johnson-Green, E. A. (2003). Passing the cultural torch: Musical experience and musical parenting of infants. Journal of Research in Music Education, 51, 102. doi:10.2307/3345844

Devroop, K. (2012). The occupational aspirations and expectations of college students majoring in jazz studies. Journal of Research in Music Education, 59, 393–405. doi:10.1177/0022429411424464

Elpus, K., & Abril, C. R. (2011). High school music ensemble students in the United States: A demographic profile. Journal of Research in Music Education, 59, 128–145. doi:10.1177/0022429411405207

Fredrickson, W. E. (2007). Music majors’ attitudes toward private lesson teaching after graduation: A replication and extension. Journal of Research in Music Education, 55, 326–343. doi:10.1177/0022429408317514

Fredrickson, W. E., Geringer, J. M., & Pope, D. A. (2013). Attitudes of string teachers and performers toward preparation for and teaching of private lessons. Journal of Research in Music Education, 61, 217–232. doi:10.1177/0022429413485245

Hancock, C. B. (2008). Music teachers at risk for attrition and migration: An analysis of the 1999-2000 schools and staffing survey. Journal of Research in Music Education, 56, 130–144. doi:10.1177/0022429408321635

Hancock, C. B. (2009). National estimates of retention, migration, and attrition: A multiyear comparison of music and non-music teachers. Journal of Research in Music Education, 57, 92–107. doi:10.1177/0022429409337299

Herbst, A., de Wet, J., & Rijsdijk, S. (2005). A survey of music education in the primary schools of South Africa’s Cape Peninsula. Journal of Research in Music Education, 53, 260–283. doi:10.1177/002242940505300307

Hopkins, M. T. (2013). Teachers’ practices and beliefs regarding teaching tuning in elementary and middle school group string classes. Journal of Research in Music Education, 61, 97–114. doi:10.1177/0022429412473607

Isbell, D. S. (2008). Musicians and teachers: The socialization and occupational identity of preservice music teachers. Journal of Research in Music Education, 56, 162–178. doi:10.1177/0022429408322853

Jutras, P. J. (2006). The benefits of adult piano study as self-reported by selected adult piano students. Journal of Research in Music Education, 54, 97–110. doi:10.1177/002242940605400202

Kuehne, J. M. (2007). A survey of sight-singing instructional practices in Florida middle-school choral programs. Journal of Research in Music Education, 55, 115–128. doi:10.1177/002242940705500203

Latimer, M. E., Bergee, M. J., & Cohen, M. L. (2010). Reliability and perceived pedagogical utility of a weighted music performance assessment rubric. Journal of Research in Music Education, 58, 168–183. doi:10.1177/0022429410369836

Lee Nardo, R., Custodero, L. A., Persellin, D. C., & Fox, D. B. (2006). Looking back, looking forward: A report on early childhood music education in accredited American preschools. Journal of Research in Music Education, 54, 278–292. doi:10.1177/002242940605400402

MacIntyre, P. D., Potter, G. K., & Burns, J. N. (2012). The socio-educational model of music motivation. Journal of Research in Music Education, 60, 129–144. doi:10.1177/0022429412444609

Madsen, C. K. (2004). A 30-year follow-up study of actual applied music practice versus estimated practice. Journal of Research in Music Education, 52, 77-88. doi:10.2307/3345526

Marjoribanks, K., & Mboya, M. (2004). Learning environments, goal orientations, and interest in music. Journal of Research in Music Education, 52, 155. doi:10.2307/3345437

Matthews, W. K., & Kitsantas, A. (2007). Group cohesion, collective efficacy, and motivational climate as predictors of conductor support in music ensembles. Journal of Research in Music Education, 55, 6–17. doi:10.1177/002242940705500102

May, L. F. (2003). Factors and abilities influencing achievement in instrumental jazz improvisation. Journal of Research in Music Education, 51, 245-258. doi:10.2307/3345377

McKeage, K. M. (2004). Gender and participation in high school and college instrumental jazz ensembles. Journal of Research in Music Education, 52, 343–356. doi:10.1177/002242940405200406

McKoy, C. L. (2012). Effects of selected demographic variables on music student teachers’ self-reported cross-cultural competence. Journal of Research in Music Education, 60, 375–394. doi:10.1177/0022429412463398

Miksza, P. (2006). Relationships among impulsiveness, locus of control, sex, and music practice. Journal of Research in Music Education, 54, 308–323. doi:10.1177/002242940605400404

Miksza, P. (2012). The development of a measure of self-regulated practice behavior for beginning and intermediate instrumental music students. Journal of Research in Music Education, 59, 321–338. doi:10.1177/0022429411414717

Miksza, P., Roeder, M., & Biggs, D. (2009). Surveying Colorado band directors’ opinions of skills and characteristics important to successful music teaching. Journal of Research in Music Education, 57, 364–381. doi:10.1177/0022429409351655

Nabb, D., & Balcetis, E. (2010). Access to music education: Nebraska band directors’ experiences and attitudes regarding students with physical disabilities. Journal of Research in Music Education, 57, 308–319. doi:10.1177/0022429409353142

Parkes, K. A., & Jones, B. D. (2012). Motivational constructs influencing undergraduate students’ choices to become classroom music teachers or music performers. Journal of Research in Music Education, 60, 101–123. doi:10.1177/0022429411435512

Reardon MacLellan, C. (2011). Differences in Myers-Briggs personality types among high school band, orchestra, and choir members. Journal of Research in Music Education, 59, 85–100. doi:10.1177/0022429410395579

Rickels, D. A., Brewer, W. D., Councill, K. H., Fredrickson, W. E., Hairston, M., Perry, D. L., … Schmidt, M. (2013). Career influences of music education audition candidates. Journal of Research in Music Education, 61, 115–134. doi:10.1177/0022429412474896

Ritchie, L., & Williamon, A. (2011). Primary school children’s self-efficacy for music learning. Journal of Research in Music Education, 59, 146–161. doi:10.1177/0022429411405214

Russell, J. A. (2008). A discriminant analysis of the factors associated with the career plans of string music educators. Journal of Research in Music Education, 56, 204–219. doi:10.1177/0022429408326762

Russell, J. A. (2012). The occupational identity of in-service secondary music educators: Formative interpersonal interactions and activities. Journal of Research in Music Education, 60, 145–165. doi:10.1177/0022429412445208

Russell, J. A., & Austin, J. R. (2010). Assessment practices of secondary music teachers. Journal of Research in Music Education, 58, 37–54. doi:10.1177/0022429409360062

Sichivitsa, V. O. (2003). College choir members’ motivation to persist in music: Application of the Tinto Model. Journal of Research in Music Education, 51, 330. doi:10.2307/3345659

Sinnamon, S., Moran, A., & O’Connell, M. (2012). Flow among musicians: Measuring peak experiences of student performers. Journal of Research in Music Education, 60, 6–25. doi:10.1177/0022429411434931

Strand, K. (2006). Survey of Indiana music teachers on using composition in the classroom. Journal of Research in Music Education, 54, 154–167. doi:10.1177/002242940605400206

Teachout, D. J. (2004). Incentives and barriers for potential music teacher education doctoral students. Journal of Research in Music Education, 52, 234. doi:10.2307/3345857

Valerio, W. H., Reynolds, A. M., Morgan, G. B., & McNair, A. A. (2012). Construct validity of the Children’s Music-Related Behavior Questionnaire. Journal of Research in Music Education, 60, 186–200. doi:10.1177/0022429412444450

Wehr-Flowers, E. (2006). Differences between male and female students’ confidence, anxiety, and attitude toward learning jazz improvisation. Journal of Research in Music Education, 54, 337–349. doi:10.1177/002242940605400406

[1] Beatty and Willis (2007) described cognitive interviewing as “…the administration of draft survey questions while collecting additional verbal information about the survey responses, which is used to evaluate the quality of the response or to help determine whether the question is generating the information that its author intends” (p. 287).

[2] X = observed score; T = true score; E = error associated with measurement